These days, it’s become popular to talk about “influentials” — people who are so charismatic and well-connected that they can start or accelerate trends. It was one of the big ideas in Malcolm Gladwell’s The Tipping Point, and it positively captivates marketers: The entire concept of “viral” marketing is based on the idea that if you get a cool product or idea into the right people’s hands, those elite folk will tip the product into a nationwide trend. It’s an intuitive picture of the world, because it matches our deep, unstated assumption that the grown-up world is basically like high school. Everyone wants to copy the cool kids.

But is this really how trends work?

Duncan Watts doesn’t think so. He’s a network scientist at Columbia University — currently on leave at Yahoo — and he’s been doing a bunch of fascinating experiments that appear to debunk the idea of “influentials”. I’d been reading his white papers for a long time, so I was excited when Fast Company asked me to write a profile of him. It appears in the current issue, so you can grab a paper copy on the newsstands now; it’s also here on the Fast Company web site, and a copy is archived below! (It includes the excellent photography of Watts by Steven Pyke, a crop of which appears above.)

Is the Tipping Point Toast?

Marketers spend a billion dollars a year targeting influentials. Duncan Watts says they’re wasting their money.

by Clive Thompson

Don’t get Duncan Watts started on the Hush Puppies. “Oh, God,” he groans when the subject comes up. “Not them.” The Hush Puppies in question are the ones that kick off The Tipping Point, Malcolm Gladwell’s best-seller about how trends work. As Gladwell tells it, the fuzzy footwear was a dying brand by late 1994 — until a few New York hipsters brought it back from the brink. Other fashionistas followed suit, whereupon the cool kids copied them, the less-cool kids copied them, and so on, until, voila! Within two years, sales of Hush Puppies had exploded by a stunning 5,000%, without a penny spent on advertising. All because, as Gladwell puts it, a tiny number of superinfluential types (“Twenty? Fifty? One hundred — at the most?”) began wearing the shoes.

Very small children are brutally honest. Show them a picture you’ve painted, sing them a song you wrote: If they don’t like it, they have no problem telling you that you suck.

So when precisely does the ability to disguise one’s opinion emerge — to engage in what psychologists call “ingratiation behavior”, and lie and say that you’re impressed when you’re not? I was surprised to learn that researchers haven’t looked too closely at the beginnings of flattery. Apparently the first studies have recently been done by Kang Lee at the Ontario Institute for Studies in Education. As the University of Toronto Magazine reports:

They asked a group of preschool children ages 3 to 6 to rate drawings by children and adults they knew, as well as strangers. The preschoolers judged the artwork both when the artist was present, and when he or she was absent. The three-year-olds were completely honest, and remained consistent in their ratings; it didn’t matter who drew it, or whether the person was in the room. Five- and six-year-olds gave more flattering ratings when the artist was in front of them. They flattered both strangers and those they knew (although familiar people got a higher dose of praise). Among the four-year-olds, half the group displayed flattery while the other half did not. This supports the idea that age four is a key transitional period in children’s social understanding of the world.

There are, Lee says, two reasons to flatter: Either to reward someone’s behavior, or to butter them up in case you need them to be nice to you later on. Lee’s not sure which strategy the four-year-olds are pursuing. But the fact that they flattered strangers as well as people they knew suggests “they are thinking ahead, they are making these little social investments for future benefits.”

Here’s some lovely news: The University of Michigan Press has asked me to edit the 2008 edition of their now-annual anthology, the Best of Technology Writing.

It’s a really terrific series. I’m biased in saying so, since one of my stories appeared in last year’s anthology, heh. But it’s a really superb collection of the most thought-provoking and well-crafted tech writing around.

So, to figure out what stories ought to go in the upcoming collection, we need your help. If you go to the publisher’s web site, you can nominate any 2007 tech story you like! The specific rules, as they note, are:

The competition is open to any and every technology topic—biotech, information technology, gadgetry, tech policy, Silicon Valley, and software engineering are all fair game. But the ideal candidates will:

- be engagingly written for a mass audience;

- be no longer than 5,000 words;

- have been published between January and December, 2007

The deadline is fast approaching — nominations need to be in by January 31, 2008! And you can nominate as many pieces as you like.

Here’s a fascinating fact: Out of the 10 bestselling books in Japan last year, five were “cellphone novels” — books that were written on the mobile phone, with the authors tapping out sentence by sentence via text message.

Apparently the rise of the cell-phone novel has caused enormous consternation over there, because the style of this new genre so radically violates traditional Japanese storytelling craft. Historically, the prose in Japanese novels was ornate, with long, lavish descriptions of locations. But because these new novels are written on technology that doesn’t allow for quick, fluid writing, cellphone novels tend to consist of prose more reminiscent of Hemingway or Pinter — short, snipped sentences, with much of the book occupied by terse dialogue.

I knew almost nothing of this trend, until I read a superb recent article by Norimitsu Onishi in the New York Times. You really gotta go read this story: Virtually every paragraph describes some weird new collision of culture, society, literature and technology.

For example, three of the top-10 bestselling novels were written by first-timers. Why? Because, as Japanese experts note, of the omnipresence of phones. We think of text-messaging merely as a medium for interpersonal communication. But if you think of the phone as a new type of word processor, then a different picture emerges. The reason all these young people are writing novels is that they’ve discovered, quite by accident, that they’re carrying typewriters around in their pockets. These authors aren’t using their phones to text other people; they’re texting themselves. “It’s not that they had a desire to write and that the cellphone happened to be there,” said Chiaki Ishihara, an expert in Japanese literature at Waseda University who has studied cellphone novels. “Instead, in the course of exchanging e-mail, this tool called the cellphone instilled in them a desire to write.” Indeed, many of the authors are teenagers who pecked out their novels during snippets of downtime at school.

Beyond awesome. Even more interesting is Onishi’s exploration of the stylistic implications of thumb-written novels. Younger readers love them; older ones don’t, because they prefer the flowing descriptive prose of traditional Japanese fiction. Some literary critics complain that because the prose is so dialogue-heavy, cell-phone novels ought more properly to be classified as comic books. (A niggling distinction, you’d think, except that literary prizes and bestseller lists hinge upon these taxonomies: After the Harry Potter books sold so well that they colonized the bestseller lists in the US, many newspapers created “children’s bestseller” lists specifically so that “serious” fiction wouldn’t get drowned out.)

Now dig this: The cell-phone novel now has its own recognizable style, obviously. But because 12-button keypads are pretty difficult interfaces upon which to compose book-length prose, many Japanese authors have begun writing cell-phone novels on computers: I.e. novels written merely in the style of 12-button composition. As Onishi notes …

… an existential question has arisen: can a work be called a cellphone novel if it is not composed on a cellphone, but on a computer or, inconceivably, in longhand?

“When a work is written on a computer, the nuance of the number of lines is different, and the rhythm is different from writing on a cellphone,” said Keiko Kanematsu, an editor at Goma Books, a publisher of cellphone novels. “Some hard-core fans wouldn’t consider that a cellphone novel.”

Our tools, of course, affect our literary output. And all this made me wonder how other writing tools affect what’s written. I use Movable Type to write my blog, and I’m constantly annoyed by how small the text-entry boxes are. Whenever I write an entry, the text quickly flows down several box-lengths, which can make it hard to keep track of my argument. The problem, of course, is that the tool was designed with the idea that people would be writing extremely short, pithy entries … whereas my entries tend to drag on and on and on. It reminds me of the writing on one of those old, proprietary-hardware word-processors from the 80s, which were outfitted with screens that only let you see seven lines at a time.

Virginia Heffernan wrote a neat piece a few weeks ago comparing the cognitive and emotional effects of several different types of word processors: She argued that the unadorned, uncluttered, blank-page aesthetic of Scrivener — an alt.word.processor — produced a “clean and focused mind,” in contrast to the distractions of Microsoft Word, with its “prim rulers” and “officious yardsticks”.

So what would my prose be like if I wrote on my phone keypad?

(Photo above by Edward B., courtesy his Creative Commons Attribution Share-Alike license!)

In the current issue of Wired magazine is my latest column — and this one’s about why I love speculative fiction: Because it’s the last remaining literature of big ideas! Check it out on the Wired site for free, and an archived copy is below.

Note to fanboys/fangirls/fanthings: I know I’m using the phrase “science fiction” imprecisely here. Technically, I’m talking about all forms of speculative writing — science fiction, fantasy, realist utopian/dystopian writing, science-fantasy, etc. But since most Wired readers probably aren’t familiar with these distinctions, nor with the term “speculative fiction” as a genus that contains many species, I used “science fiction” in its place … even though this usage is imprecise and basically inaccurate. (BTW, the graphic above is a crop of the nifty illustration accompanying the piece, by Rodrigo Corral.)

And away we go …

Take the Red Book

Why Sci-Fi Is the Last Bastion of Philosophical Writing

by Clive Thompson

Recently I read a novella that posed a really deep question: What would happen if physical property could be duplicated like an MP3 file? What if a poor society could prosper simply by making pirated copies of cars, clothes, or drugs that cure fatal illnesses?

The answer Cory Doctorow offers in his novella After the Siege is that you’d get a brutal war. The wealthy countries that invented the original objects would freak out, demand royalties from the developing ones, and, when they didn’t get them, invade. Told from the perspective of a young girl trying to survive in a poor country being bombed by well-off adversaries, After the Siege is an absolute delight, by turns horrifying, witty, and touching.

Technically, After the Siege is a work of science fiction. But as with so many sci-fi stories, it works on two levels, exploring real-world issues like the plight of African countries that can’t afford AIDS drugs. The upshot is that Doctorow’s fiction got me thinking — on a Lockean level — about the nature of international law, justice, and property.

One of the broken-record themes of my blog — and my video-games journalism — is how badly our culture understands the meaning of play and games. This is partly because the philosophy of play, ludology, isn’t taught at any level of school; it’s also been almost completely ignored by philosophers both ancient and modern. Small children love to dream up weird new games and think about new forms of play, but this is systematically drummed out of them when they go to school and are told that there are only seven or eight “serious” sports, like football and baseball and the like.

So I was delighted to open up this weekend’s “Week in Review” section of the New York Times and find that John Schwartz had written “The Joy of Silly” — a lovely, thoughtful piece on the culture of the wacky Wham-O toys of the 60s, created by the recently and sadly deceased Wham-O founder Richard Knerr. Here’s an excerpt:

Our toys, Dr. Tenner said, flow from the cycles of innovation and refinement that define all technologies. The playthings tend to be the byproducts of a new technology and a fertile imagination. So Silly Putty came from failed experiments in making artificial rubber, and the Slinky was a tension spring that a naval engineer saw potential in — and not just potential energy. The postwar period from 1945 to 1975 was especially rich in innovation, and thus toys, Dr. Tenner said.

But the cultural moment has to be right as well. “You can see pictures in Bruegel of kids running after a hoop and a stick,” he noted, but in the Hula Hoop the technology of cheap, plastic manufacturing dovetailed with a nation ready to shake its hips. The message of the Hula Hoop, and for that matter of Elvis Presley, he said, emerged in a time for many of intense optimism, which seemed to say: “You can let yourself go. You can dance wildly. You can swing wildly. You don’t have this dignity to preserve.”

Dr. Hall said one thing that defined the early Wham-O toys was that they were “a little transgressive,” and involved physical activity with a little naughtiness or risk.

There’s plenty more worth reading in this too-short piece! Schwartz also quotes Kay Redfield Jamison, a professor of psychiatry at the Johns Hopkins School of Medicine, who points out that toys of the Wham-O vintage were “so noneducational in that dreary, earnest, modern sense of ours.” This is a superb point: When I walk into toy stores — which I do a lot more frequently now that I have a two-year-old — I’m struck by how avidly the toy-makers are trying to peddle their wares based on their presumed educational value. Never mind the fact that these educational aspects are usually just corporate bumph (they’re almost never scientifically tested, for sure); the point is that the toy-makers know that parents desperately want the toys to be an early inflection point in their children’s parabolic punt into Yale or Harvard. Parents are terrified that if their kids play in an open-ended way, they’ll just — well — waste time.

Yet — as Schwartz comes close to saying outright, but doesn’t quite — one of the whole points behind play and games is to waste time. It’s not the sole point or even the chief point, but it’s a frequent one. One of the reasons I like playing video games is specifically to park my brain inside a ringing, clattering box of physics for an hour or so, merely for the gorgeously idle pleasure of it. I do not intend it to be productive: I am choosing to waste time. Hell, I probably need to waste a certain percentage of every day simply to prevent myself from getting emotional rug-burn from all my other, frenetically Taylorist attempts to optimize every single waking minute. When I install a stupid, time-wasting game on my PDA phone, it’s partly to restore that device’s spiritual balance — to make sure that I use it to waste some time. Otherwise I’d just be using it to check email neurotically all day long, and precisely what kind of life is that?

Wasting time proudly has, I’ve decided, become a weirdly radical act.

(The picture above is by Marilynn K. Yee, and beautifully illustrated the Times piece … check it out in full here.)

One of the biggest puzzles of Islamic terrorism is why so many of its participants are engineers. Perhaps the most famous one is Mohammad Atta, the 9/11 mastermind (pictured above); but when you read news reports of suicide bombing incidents, you realize he’s not alone. It’s engineer after engineer after engineer.

Why? Because in the Middle East, the mindset of engineers mixes with religiosity — and a lack of professional opportunity — to produce a toxic, combustive psychology. That’s the conclusion of “Engineers of Jihad”, a paper by sociologists Diego Gambetta and Steffen Hertog of the University of Oxford — the link is directly to the full PDF.

Their research is incredibly interesting and thorough. To start off, they compiled a list of 404 members of violent Islamist groups, and found that engineers were, indeed, wildly overrepresented. Engineers make up only 3.5 per cent of the population in the Islamic countries they studied, yet they made up 19 per cent of the group of terrorists — which means engineers are more than five times as likely to be terrorists as they ought to be.
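For what it’s worth, that ratio is just one share divided by the other. Here is a back-of-the-envelope sketch in Python using only the two percentages quoted above; the function and variable names are mine, not anything from the paper, and it says nothing about how Gambetta and Hertog actually ran their numbers.

```python
# Shares quoted in the post, as percentages.
population_share = 3.5   # engineers as a share of the countries studied
sample_share = 19.0      # engineers as a share of the 404-member list


def overrepresentation(sample_pct: float, baseline_pct: float) -> float:
    """Ratio of a group's share in a sample to its share in the baseline."""
    if baseline_pct <= 0:
        raise ValueError("baseline share must be positive")
    return sample_pct / baseline_pct


ratio = overrepresentation(sample_share, population_share)
print(f"{ratio:.1f}x")  # 5.4x
```

Dividing the shares this way gives a ratio of roughly 5.4.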

Where things get really interesting is their exploration of engineering psychology — and the dynamics of engineers’ lives in the Islamic countries. First off, Gambetta and Hertog note that previous sociological studies have found that engineers are far more likely to be religious and conservative than other academics — four times more than social scientists and three times more so than people in the arts and humanities. What’s more, this religiosity affects their careers. A study by the Carnegie Foundation found that the more religious and conservative an engineer is, the less likely she or he is to be regularly publishing work — and thus less likely to be employed as an engineer.

To make matters worse, as the academics point out, most of the Islamic countries in which these engineers become radicalized have crappy economies: When the engineers return home from their training in the West, they can’t find good jobs. In Egypt, for example, “Many graduates preferred joblessness even to relatively well-paying menial jobs, and for numerous young Egyptians marriage became unaffordable. Making a virtue out of necessity, many graduates tried to restore their dignity by declaring their adherence to antimaterialist Islamic morality.”

On top of this is what Gambetta and Hertog call the “engineering mentality”. To quote them at some length:

Friedrich von Hayek, in 1952, made a strong case for the peculiarity of the engineering mentality, which in his view is the result of an education which does not train them to understand individuals and their world as the outcome of a social process in which spontaneous behaviours and interactions play a significant part. Rather, it fosters on them a script in which a strict ‘rational’ control of processes plays the key role: this would make them on the one hand less adept at dealing with the confusing causality of the social and political realms and the compromise and circumspection that these entail, and on the other hand inclined to think that societies should operate orderly akin to well-functioning machines — a feature which is reminiscent of the Islamist engineers …

The upshot? The creation of a class of young men who are highly educated, conservative, highly religious, economically thwarted, pissed off at both their own countries and the West, unable or unwilling to examine the complex social and political reasons for their personal troubles, and seeking a straightforward “answer”. “It appears that engineers … found themselves perfectly and painfully placed at a high-voltage point of intersection in which high ambitions and high frustration collided,” Gambetta and Hertog conclude.

It’s a really interesting analysis! Still, I was surprised to read some of their data and assumptions. For example, engineers being overall more conservative? That shocked me: I hang out with tons of computer and software engineers, and if anything they tend to be either left-wing or libertarian. (Or, as the Jargon File would have it, just generally suspicious of authority.) But possibly computer engineers, because they deal with the flow of information — a discipline that necessarily bumps you up intellectually against notions of freedom, secrecy, customization, personal choice, etc. — wind up in a different emotional and psychological place than engineers who work more straightforwardly with physics. And one could easily dispute other parts of Gambetta and Hertog’s argument; Hayek’s analysis of engineering psychology has been disputed.

Still, this is one of the most ambitious attempts to tackle the puzzle of engineer terrorists I’ve yet seen.

(Thanks to the Atlantic Monthly for finding this one!)

This is fascinating: Apparently geologists have spent decades assuming that the shapes of Mid-Atlantic-state rivers were natural — when they’re actually man-made.

Basically, one of the problems with studying rivers in the US is that so many have been warped by commercial and residential development that it’s hard for us to know what a stream ought to look like, naturally. The closest to “natural” that the geologists could identify were rivers of the mid-Atlantic states — which move in ribbon-like channels through silty banks. They assumed, for decades, that this ribbon-like shape was the Platonic ideal.

But it turns out that those ribbon-straight rivers were in fact affected by human development, as two scientists — Robert C. Walter and Dorothy J. Merritts — report in Science today. As the New York Times reports:

In a telephone interview, Dr. Merritts described a typical scenario. Settlers build a dam across a valley to power a grist mill, and a pond forms behind the dam, inundating the original valley wetland. Meanwhile, the settlers clear hillsides for farming, sending vast quantities of eroded silt washing into the pond.

Years go by. The valley bottom fills with sediment trapped behind the dam. By 1900 or so the dam is long out of use and eventually fails. Water begins to flow freely through the valley again. But now, instead of reverting to branching channels moving over and through extensive valley wetlands, the stream cuts a sharp path through accumulated sediment. This is the kind of stream that earlier researchers thought was natural.

“This early work was excellent,” Dr. Merritts said, “but it was done unknowingly in breached millponds.”

(Image above by Fhantazm, via his Creative Commons Attribution-NonCommercial-ShareAlike license!)

Dig it: Companies run by CEOs with attractive faces tend to have higher profits, according to a pretty hilarious new study appearing in next month’s issue of Psychological Science. Psychologists Nicholas Rule and Nalini Ambady took a bunch of pictures of CEOs, put them into grayscale and standardized them in size, and then showed them to college students. The students were given no other information about the CEOs — they weren’t even told which companies they ran. With nothing other than the picture to go on, the students were asked to rate the CEOs according to their apparent “competence, dominance, likeability, facial maturity and trustworthiness.”

The upshot? CEOs who scored high on the Am-I-Hot-Or-Not ratings turned out to be piloting the most profitable companies. As a press release notes:

“These findings suggest that naive judgments may provide more accurate assessments of individuals than well-informed judgments can,” wrote the authors. “Our results are particularly striking given the uniformity of the CEOs’ appearances.” The majority of CEOs, who were selected according to their Fortune 1000 ranking, were Caucasian males of similar age.

We could, of course, regard this as a sterling example of “Science Confirms The Obvious.” I mean, in modern America, is this news? That attractive, confident-looking white dudes are where it’s at? And this comes on the heels of dozens of recent studies of hot-ology, which have demonstrated time and time again that tall, willowy, cocksure white folks are cleaning everyone else’s clocks. It also fits neatly into Malcolm Gladwell’s thesis in Blink — i.e. that first impressions are of enormous importance.

Nonetheless, we are, as the scientists note, left with the chicken-and-egg question: “which came first, the powerful-looking CEO or their successful career?”

As a reader, my favorite blog is Boing Boing. But as a blogger myself, I find Boing Boing is often a bit of a hassle to blog around, because my policy is to virtually never post about anything that has already been seen on Boing Boing. My theory is a) that I prefer to introduce my readers to things they haven’t heard of yet, and that b) most of them are already reading Boing Boing, so c) I won’t bother covering anything Boing Boing has already posted about. (The exception is if something posted on Boing Boing makes me think of an original analysis — i.e. a new point about something.)

As you’d expect, this policy dices me out of many juicy postings because Boing Boing is incredibly fast. Friends or readers will send me a link to something cool, but when I’m swamped with work it often takes me a day or more to post it — during which time Boing Boing will, almost without fail, beat me to the punch. Ah well.

But precisely how good — and how fast — is Boing Boing? Is it just that I’m lazy and slow, or does Boing Boing beat most other blogs too in discovering links? The blogger Simon Owens recently wondered about this, so he decided to run some data and check it out. He recorded every link that Boing Boing posted on a particular day, and then removed any postings that were self-promotional, because they have an unfair advantage in breaking news about themselves. That left 16 postings of links to other web sites. Owens used blog-search engines to see which other blogs had posted about those links — i.e. whether any other blogs had scooped Boing Boing.

In the end, there was a grand total of 112 blogs that had scooped Boing Boing for this 24-hour period. Divided by 16, that means that an average of 7 blogs scoop Boing Boing for every post. But this is a slightly misleading figure, because of the 16 links that day, Boing Boing was the first to post 8 of them. That means that for 50% of the links that Boing Boing posts, it was the first blog to find them.

I also noticed that the later in the day the links were posted, the more likely that other blogs had managed to scoop Boing Boing. This indicates that many of the links posted on Boing Boing are to URLs that were created within a 24-hour time span.

So what does this mean? Was my theory correct?

Well, in this particular instance: Yes. Boing Boing was consistently among the first blogs in the blogosphere to discover a link of interest and then post it.

Pretty cool stuff.
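
To see why the average of 7 is “slightly misleading,” it helps to lay the arithmetic out. A rough Python sketch follows, using only the three figures quoted above. The variable names are mine, and it assumes (as the definition of “scooped” implies) that a link Boing Boing posted first has zero blogs ahead of it.

```python
total_scoops = 112  # blogs that beat Boing Boing to a link, summed over the day
links = 16          # non-self-promotional links posted that day
first_by_bb = 8     # links Boing Boing posted before anyone else

# Average over every link, including the ones nobody scooped.
mean_all = total_scoops / links

# Average over only the links where somebody got there first.
scooped_links = links - first_by_bb
mean_scooped = total_scoops / scooped_links

# Share of links where Boing Boing was first.
first_rate = first_by_bb / links

print(mean_all)      # 7.0
print(mean_scooped)  # 14.0
print(first_rate)    # 0.5
```

In other words, the 112 scoops all land on just half the links, so those links were beaten by 14 blogs apiece on average, while the other half were beaten by none.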

We are, by now, accustomed to talking about “the genetic code.” But we rarely think about what that metaphor means.

So I was delighted to stumble across this fun essay: “DNA as seen through the eyes of a coder.” Bert Hubert, a programmer, compares DNA to computer code and finds a number of startling similarities. For example: DNA is highly “commented,” just like good computer code; indeed, junk DNA can be thought of as code that is “commented out” — i.e. old code left over from previous revisions that is no longer used, and surrounded by comments telling the processor to ignore it. DNA also exhibits “bug regression” — new, unexpected bugs that are caused when a programmer tries to fix an existing bug. (Mutations that emerged in Africans to create immunities to malaria, for example, accidentally made the hosts susceptible to sickle-cell anemia.)

But this is my favorite part of the essay:

Somebody recently proposed in a discussion that it would be really cool to hack the genome and compromise it so as to insert code that would copy itself to other genomes, using the host-body as its vehicle. ‘Just like the nimda worm!’

He shortly thereafter realised that this is exactly what biological viruses have been doing for millions of years. And they are exceedingly good at it.

A lot of these viruses have become a fixed part of our genome and hitch a ride with all of us. To do so, they have to hide from the virus scanner which tries to detect foreign code and prevent it from getting into the DNA.

The metaphor, of course, works both ways. Just as the mechanics of DNA are a useful metaphor to help understand how computer viruses work, the mechanics of computer programming are a useful metaphor to help understand how DNA works.
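
If you’ve never seen “commented out” code, here’s a tiny Python illustration of the programming half of the metaphor. The function is a made-up example of mine, not something from Hubert’s essay: the old revision is still sitting in the source, but the interpreter skips it, much as the essay says junk DNA is ignored.

```python
def greet(name: str) -> str:
    """Return a greeting for the given name."""
    # Old revision, kept in the file but commented out, so it never runs:
    # return "HELLO, " + name.upper() + "!!!"
    return f"Hello, {name}."


print(greet("Ada"))  # Hello, Ada.
```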

(Thanks to Justin Blanton for this one!)

This is probably one of the most controversial video-game columns I’ve ever written. Back in November — I’m coming late to this, of course, because of my two-month blogging drought — I wrote a piece for Wired News about how playing Halo 3 online had given me a glimpse into the strategic use of suicide bombing.

As you might imagine, I got a ton of email and blog comments about it. Interestingly, only a very few accused me of trivializing real-world suicide bombing — which is nice, because I certainly didn’t want to — and the great majority “got” my point. But, hilariously, a significant chunk of people, including most of the thread following my post at Wired News, chimed in to berate me for sucking at Halo, and/or to offer me tips on improving my play. Heh.

At any rate, this’ll all make more sense after you read the piece. It’s online free at Wired News, and a copy is permanently archived below!

Suicide Bombing Makes Sick Sense in Halo 3

by Clive Thompson

I used to find it hard to fully imagine the mind-set of a terrorist.

That is, until I played Halo 3 online, where I found myself adopting — with great success — terrorist tactics. Including a form of suicide bombing.

This probably bears some explanation. I’ll begin by pointing out a basic fact: A lot of teenage kids out there play dozens of hours of multiplayer Halo a week. They thus become insanely good at the game: They can kill me with a single head shot from halfway across a map — or expertly circle me while jumping around, making it impossible for me to land a shot, while they pulverize me with bullets.

Here’s a lovely sign of the times: College basketball coaches have discovered that the only way to forge an emotional bond with teenage athletes you’re trying to recruit is to send them text messages.

Indeed, apparently coaches now inundate star high-school basketball players with so many SMS messages that the NCAA has recently banned Division I colleges from using text messaging in recruiting. The texting was so voluminous it was causing serious charges on the kids’ monthly bills.

There’s a New York Times story today that gorgeously unpacks the social revolution afoot. Here’s a taste:

“What kind of relationship can you build in 160 characters?” asked Kerry Kenny, the incoming chair of the N.C.A.A.’s Division I Student Athlete Advisory Committee, referring to the maximum length of a text message.

Many college coaches say text messaging is an effective way to build a casual relationship with potential recruits.

“Sometimes kids don’t want to talk on the phone,” said Pat Skerry, an assistant men’s basketball coach at Rhode Island. “They don’t give you much.”

Skerry and other basketball coaches are allowed to call seniors twice a week. In most other sports, phone calls to seniors are limited to one a week, although coaches can also send e-mail messages and faxes. Before the ban went into effect, “I’d just sit on the couch late at night, just kind of flicking away, while the TV was on,” Skerry said. “It’s a good way to stay up with 40, 50 kids almost daily.”

I love it. The teenagers do not actually regard the phone as something to be talked on. I have to say, I’m coming around to the same point of view. I’m surprised how often, when I’m in the middle of a business conversation, I wish the exchange were happening in text — so I could quickly skim the content of a conversation, and skip past the throat-clearing pleasantries. This is particularly true of PR folks who call me to pitch their products or companies for coverage. I’m happy to hear about any and all pitches, but man alive, it can be horribly tedious to slog through them on the phone. Ditto for voice mail. The slow, ponderous nature of voice mail — and the fact that you can’t cut and paste information in it — has made me almost consider a total ban on it. I’m thinking of simply leaving a message saying hi, I’m not at my phone right now, and I don’t take voice mail — please email me at clive@clivethompson.net. Literally the only person on the planet I personally know who doesn’t use email — or computers, for that matter — is my mother. And I always try to answer the phone when I see her calling!

Anyway, the point is, I quite understand why the student athletes prefer texting. Could you imagine the nightmare of trying to hack through dozens of voice mails every day from pleading coaches?

(The picture above is by Nesster, courtesy his Creative Commons Attribution Share-Alike license!)

While egosurfing for responses to my blogging via Technorati, I ran across Matt Corwine’s excellent response to my posting of a few days ago, in which I declared that “audiophiles are jackasses.” As Matt writes:

But here’s something different: I wonder whether he’s being fair to the extreme audiophiles by assuming that in addition to being really into speakers, they’re also into music. Perhaps audiophilia and musicophilia are two different things that are sometimes, but not always, present in the same brain.

So there’s music and then there’s sound. A lot of people like both, but maybe some who like sound don’t much care for music — they might be happy just listening to test tones or Boston records or whatever, as long as it sounds great on their system.

I’m probably 5dB short of being an audiophile. Before I bought my first record, I was really into listening to the vacuum cleaner. Today, I can sometimes get into hearing awesomely produced music on a high-end system that costs more than my house, but I think the part of my brain that gets off on such things is separate from the part that actually likes music. In the same way that I enjoy making sushi for entirely different reasons than I enjoy eating it.

It’s a great point. It reminded me of a similar phenomenon: Guitar-collecting freaks who do not actually record or perform with their gear, but who merely enjoy having 50 different guitars around so they can occasionally play a chord or two and re-experience the timbre that makes each unique.

What’s the best music to exercise to? Scientists and laypeople alike have long known that music affects everything from your mood to your co-ordination. But apparently one psychologist has attempted to quantify the effect of music on your workout: Costas Karageorghis, an associate professor of sport psychology at Brunel University in England. Ten years ago, he invented the Brunel Music Rating Inventory, which ranks songs based on four criteria. According to a story in today’s New York Times …

… one of the most important elements, Dr. Karageorghis found, is a song’s tempo, which should be between 120 and 140 beats-per-minute, or B.P.M. That pace coincides with the range of most commercial dance music, and many rock songs are near that range, which leads people to develop “an aesthetic appreciation for that tempo,” he said. It also roughly corresponds to the average person’s heart rate during a routine workout — say, 20 minutes on an elliptical trainer by a person who is more casual exerciser than fitness warrior.

Dr. Karageorghis said “Push It” by Salt-N-Pepa and “Drop It Like It’s Hot” by Snoop Dogg are around that range, as is the dance remix of “Umbrella” by Rihanna (so maybe the pop star was onto something). For a high-intensity workout like a hard run, he suggested Glenn Frey’s “The Heat Is On.” [snip]

In other words, the best workout songs have both a high B.P.M. count and a rhythm to which you can coordinate your movements. This would seem to eliminate any music with abrupt changes in time signature, like free-form jazz or hard-core punk, as well as music that varies widely in intensity, like much of indie rock.

I love it: Don’t exercise to indie rock! It’s too whiny! And do not even think of working out to emo. That stuff’ll reverse your metabolic rate.

Oddly, this whole debate reminds me of Sasha Frere-Jones’ critique of indie rock in the New Yorker — “A Paler Shade of White” — in which he argued that indie rock (such as Wilco, pictured above) has systematically stripped out any influences from the R&B roots of American rock ’n’ roll proper, and has thus become, among other things, singularly undanceable. “In the past few years, I’ve spent too many evenings at indie concerts waiting in vain for vigor, for rhythm, for a musical effect that could justify all the preciousness,” he wrote. “How did rhythm come to be discounted in an art form that was born as a celebration of rhythm’s possibilities?” As you’d imagine, there was a tsunami of outcry against — and praise for — Frere-Jones’ piece. Those who agreed with him decried what they saw as the unrhythmic plodding-ness of indie rock; those who disagreed pointed out plenty of bouncy counterexamples, and questioned Frere-Jones’ whole identification of whiteness with a lack of syncopation.

But it strikes me that we could resolve the question by gathering some highly relevant data: The playlists on MP3 players at the local gym! If we presume that exercise goes best to rhythmic music, and furthermore that few gym-goers would actively seek to undercut their workout with nonrhythmic music, then we’ve got a nice built-in control for the inherent subjectivity of music appreciation. If indie rock is rhythmic, people will exercise to it; if it isn’t, they won’t.
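For anyone tempted to actually run that study, here is a rough sketch of the counting step in Python. Everything in it is an assumption made for illustration: the `genre_shares` helper, the track names, and the genre tags are hypothetical, and a real study would need a defensible way to label genres in the first place.

```python
from collections import Counter

def genre_shares(playlists):
    """Return each genre's share of all tracks across the given playlists.

    Each playlist is a list of (title, genre) pairs. The genre labels are
    assumed to have been assigned already; that is the hard part in practice.
    """
    counts = Counter(genre for playlist in playlists for _title, genre in playlist)
    total = sum(counts.values())
    return {genre: n / total for genre, n in counts.items()}

# Hypothetical data: two made-up gym playlists with placeholder tracks.
gym_playlists = [
    [("track 1", "dance"), ("track 2", "hip-hop"), ("track 3", "indie rock")],
    [("track 4", "dance"), ("track 5", "dance")],
]

shares = genre_shares(gym_playlists)
for genre, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{genre}: {share:.0%}")
```

If indie rock's share at the gym came out far below its share in the same people's everyday listening, that would be a point for Frere-Jones. If the shares matched, a point for his critics.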

Anyone out there looking for a fun sports-psychology MA or PhD thesis?

A couple of weeks ago, the New York Times Magazine published its 2007 “Year in Ideas” issue — their annual compendium of the year’s most interesting and offbeat research. I wrote up five of their scientific and technological entries. The entire issue is online here for free, but I’m also archiving my pieces here for posterity’s sake.

This one is really fun — it’s about the “Gomboc”, the world’s first self-righting object: an object with only one stable and one unstable point of balance. You can see a video of the Gomboc in action here!

The self-righting object

The Gomboc is a roundish piece of clear synthetic material with gently peaked, organic curves. It looks like a piece of modern art. But if you tip it over, something unusual happens: it rights itself.

It leans off to one side, rocks to and fro as if gathering strength and then, presto, tips itself back into a “standing” position as if by magic. It doesn’t have a hidden counterweight inside that helps it perform this trick, like an inflatable punching-bag doll that uses ballast to bob upright after you whack it. No, the Gomboc is something new: the world’s first self-righting object.

A couple of weeks ago, the New York Times Magazine published its 2007 “Year in Ideas” issue — their annual compendium of the year’s most interesting and offbeat research. I wrote up five of their scientific and technological entries. The entire issue is online here for free, but I’m also archiving my pieces here for posterity’s sake.

This one is about a pair of scientists who investigated the mystery of how laptop cords get tangled up so quickly when you leave them loose in your bag. Heh.

Knot Physics

When Doug Smith pulls the power cord for his laptop out of his bag, he inevitably finds that — whoops! — it has somehow tangled itself into a dense knot. This is, of course, a common complaint of the high-tech age (and before, with other types of cord). Most of us simply shrug. But Smith is a physics professor at the University of California, San Diego, and he wanted to know precisely why the knots form in the first place.

So he devised a clever experiment. Working with his research assistant Dorian Raymer, he took some string — about the thickness of a computer-mouse cord — and dropped it into a small square plastic box. They spun the box around for 10 seconds, then opened it up. Sure enough, they found “this really monster, complex knot,” Smith says. Then they repeated the experiment a dizzying 3,415 more times, using strings of different lengths and boxes of larger sizes, to see whether there were any rules that governed how badly the string knotted.

A couple of weeks ago, the New York Times Magazine published its 2007 “Year in Ideas” issue — their annual compendium of the year’s most interesting and offbeat research. I wrote up five of their scientific and technological entries. The entire issue is online here for free, but I’m also archiving my pieces here for posterity’s sake.

This one is about “the death of checkers” — the story of the scientist who solved the game. Reinhard Hunger did the awesome illustration that is thumbnailed above; check out the full-size version on the Times’ site!

The Death of Checkers

Checkers has been around for more than 400 years, has been enjoyed by billions of players and has taught generations of young children the joy of strategy.

And now it’s all over. This July, Jonathan Schaeffer, a computer scientist at the University of Alberta in Canada, announced that after running a computer program almost nonstop for 18 years, he had calculated the result of every possible endgame that could be played, all 39 trillion of them. He also revealed a sober fact about the game: checkers is a draw. As with tic-tac-toe, if both players never make a mistake, every match will end in a deadlock.
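The tic-tac-toe comparison is small enough to check by brute force. The Python sketch below is only an illustration of what "solved" means for that tiny game, not a description of Schaeffer's program, which had to cope with a vastly larger game. The board encoding (a 9-character string) and the function names are my own choices.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board whose cells are indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def value(board, player):
    """Result under perfect play, from X's side: +1 X wins, 0 draw, -1 O wins."""
    won = winner(board)
    if won == "X":
        return 1
    if won == "O":
        return -1
    if " " not in board:
        return 0  # board full with no winner: a draw
    other = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], other)
               for i, cell in enumerate(board) if cell == " "]
    # X picks the best outcome for X; O picks the worst outcome for X.
    return max(results) if player == "X" else min(results)

result = value(" " * 9, "X")
print(result)  # 0 means a draw when neither side makes a mistake
```

Tic-tac-toe has only a few thousand reachable positions, so this search finishes almost instantly. Checkers is so much bigger that exhaustive analysis took years of computing, which is the point of the story.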

A couple of weeks ago, the New York Times Magazine published its 2007 “Year in Ideas” issue — their annual compendium of the year’s most interesting and offbeat research. I wrote up five of their scientific and technological entries. The entire issue is online here for free, but I’m also archiving my pieces here for posterity’s sake.

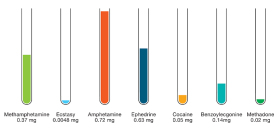

This one’s about “community urinalysis”! The illustration, by Cybu Richli, accompanied it.

Community Urinalysis

Everyone knows how a drug test works: You urinate into a cup and your employer (or prospective employer) has the sample tested to see if you’ve been using any illegal substances. This year, though, Jennifer Field, an environmental chemist at Oregon State University, experimented with an unusual variation on this process. She found out what illicit drugs the population of an entire city was ingesting.

How? By collecting and then testing water from the city’s sewage-treatment plant. Since all drug users urinate, and since the urine eventually winds up in the sewers, Field and her fellow researchers figured that sewer water would contain traces of whatever drugs the citizens were using.

Sure enough, when Field’s team tested a mere teaspoonful of water from a sewage plant — which it ultimately did in many American cities — the sample revealed the presence of 11 different drugs, including cocaine and methamphetamine.

A couple of weeks ago, the New York Times Magazine published its 2007 “Year in Ideas” issue — their annual compendium of the year’s most interesting and offbeat research. I wrote up five of their scientific and technological entries. The entire issue is online here for free, but I’m also archiving my pieces here for posterity’s sake.

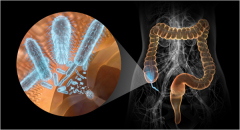

This one’s about a new theory for why the appendix exists! That lovely image above, by Bryan Christie Design, accompanied it.

The Appendix Rationale

For years, the appendix got no respect. Doctors regarded it as nothing but a source of trouble: It didn’t seem to do anything, and it sometimes got infected and required an emergency removal. Plus, nobody ever suffered from not having an appendix. So human biologists assumed that the tiny, worm-shaped organ is vestigial — a shrunken remainder of some organ our ancestors required. In a word: Useless.

Now that old theory has been upended. In a December issue of The Journal of Theoretical Biology, a group of scientists announce they have solved the riddle of the appendix. The organ, they claim, is in reality a “safe house” for healthful bacteria — the stuff that makes our digestive system function. When our gut is ravaged by diseases like diarrhea and dysentery, the appendix quietly goes to work repopulating the gut with beneficial bacteria.

There’s a fascinating piece in today’s New York Times about a new legal fight: Should border guards be able to search through the contents of your laptop when you’re entering the US? Apparently this question is being decided, as we speak, by several federal courts. The administration argues that yes, it should be allowed to look through your hard drive, partly for practical reasons — for example, they’ve discovered people with child pornography crossing the border — and for legal reasons: A search through a hard drive is no different than searching through one’s paper records in a briefcase. Most federal courts have agreed with this reasoning.

But one judge — Dean D. Pregerson of Federal District Court in Los Angeles — recently disagreed, and barred the results of an airport laptop search. Why? Because, as the story notes:

“Electronic storage devices function as an extension of our own memory,” Judge Pregerson wrote, in explaining why the government should not be allowed to inspect them without cause. “They are capable of storing our thoughts, ranging from the most whimsical to the most profound.”

This is incredibly fascinating stuff. It’s also going to become more and more crucial, because — as I’ve noted in a recent Wired column, and my profile last year of Gordon Bell, the guy who’s outsourcing all his memory to a terabyte hard drive — we’re offloading more and more of our grey matter to our silicon matter. Pregerson is precisely right. In an era where the line between our artificial memory and our real one is becoming increasingly blurry, searching through a hard drive is going to be more and more like reading your mind.

Here’s an easy prediction: Anyone who’s worried about memory-privacy at the border will start storing most of their silicon thoughts online, where border guards won’t have access to them. Of course, leaving all your stuff on Google Drive has its own problems; it’s another easy place for the government to subpoena. So there’ll be other solutions, probably, including steganographic memory storage — hiding documents inside other documents — and new forms of crypto. Either way, interesting times ahead, eh?

About a year ago, I blogged about the “loudness wars” in music: How the overuse of compression is killing the dynamic range of albums these days. Compression, to recap, is the technique of reducing the acoustic difference between the quietest and loudest parts of a song. In the old, old days of the 70s, most rock albums had very quiet and very loud parts — they weren’t very compressed. But beginning in the 90s, record producers began compressing the heck out of recordings, because when the quieter parts aren’t significantly quieter than the louder parts, the overall song sounds “louder”: It blasts out of the speakers with a more commanding, electric presence.
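To make the idea concrete, here is a deliberately crude sketch in Python. It is a toy under stated assumptions: real studio compressors work on decibel levels with attack and release times, while this one just squashes raw sample amplitudes above a threshold and then turns everything back up. The function names, the threshold, and the 4:1 ratio are illustrative choices, not anything taken from an actual mastering tool.

```python
def compress(samples, threshold=0.5, ratio=4.0):
    """Shrink the part of each sample's level that exceeds `threshold`."""
    out = []
    for s in samples:
        level = abs(s)
        if level > threshold:
            level = threshold + (level - threshold) / ratio
        out.append(level if s >= 0 else -level)
    return out

def normalize(samples, peak=1.0):
    """Scale everything so the loudest sample sits at `peak` (make-up gain)."""
    biggest = max(abs(s) for s in samples)
    return [s * peak / biggest for s in samples]

def loud_to_quiet_ratio(samples):
    """How many times louder the loudest sample is than the quietest."""
    levels = [abs(s) for s in samples]
    return max(levels) / min(levels)

# A made-up signal: a quiet passage followed by a loud one.
quiet_then_loud = [0.1, -0.1, 0.1, 1.0, -1.0, 1.0]
squashed = normalize(compress(quiet_then_loud))

print(loud_to_quiet_ratio(quiet_then_loud))  # gap before compression
print(loud_to_quiet_ratio(squashed))         # smaller gap afterwards
```

The peaks end up exactly as loud as before, but the quiet passage has been pulled up toward them. That is why a compressed song sounds "louder" overall, and also why it has less room for contrast.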

Alas, it also sounds more monotonous, and psychoacousticians have long argued that highly compressed music leads to “ear fatigue”. So the upshot is today’s music sounds less and less distinctive, with performances that have less and less nuance. It’s gotten so bad that even the music industry is getting worried that they’re ruining music. According to a great piece on this subject in the latest issue of Rolling Stone, a panel at last year’s South by Southwest music conference — entitled “Why Does Today’s Music Sound Like Shit?” — was focused almost exclusively on the problem of overcompression. The producers suggested that it’s time to start recording music with far less compression, so that the true sonic variety of a song can be re-experienced.

Fair enough. But here’s the really interesting thing: The story goes on to point out that it may simply be too late:

But even most CD listeners have lost interest in high-end stereos as surround-sound home theater systems have become more popular, and superior-quality disc formats like DVD-Audio and SACD flopped. Bendeth and other producers worry that young listeners have grown so used to dynamically compressed music and the thin sound of MP3s that the battle has already been lost. “CDs sound better, but no one’s buying them,” he says. “The age of the audiophile is over.”

When I read that final line, I was, I have to confess, struck by a powerful — if snotty — thought: Thank god the age of audiophiles is over. Speaking as someone who loves music, who has actually played and recorded pop music for 20 years, and who still plays six different instruments, I think music is crucial to the human spirit.

But audiophiles? Audiophiles are jackasses. You know who I’m talking about: The guys — and they’re almost always guys — who own $54,000 stereo systems and have their entire apartments dominated by thousands of vinyl albums of rare imports that are boring beyond description but which they force you to listen to, when you make the ghastly mistake of actually visiting their sonic sanctuaries.

I think what annoys me about audiophiles — and perhaps what has begun to annoy me, ever so slightly, about the handwringing over “the loudness wars” — is that they posit a way-too-fussy, sanctimonious attitude towards how one ought to listen to pop music. Because when it comes to pop music, are ultra-high-precision sound systems really so necessary, or even desirable? After all, pop music originally came to life in the 50s and 60s on horrifically tinny AM radios. Indeed, the playback devices were so crude that producers had to mix the stuff specifically to account for the jurassic properties of the godawful speakers. (One of the main reasons Phil Spector invented the “Wall of Sound” was that it gave a relatively fat sound when played on jukebox-primitive sound systems.)

In fact, I’ve come to believe that crappy technology — lousy studios, horrible playback devices — is a boon to pop music. Because when you strip out the superhigh and superlow frequencies that send audiophiles — planted with geometric triangulation betwixt their $325,000 Acapella speakers (pictured above!) — into such supposedly quivering raptures, you’re forced to reckon with a much simpler question, which is: Is the song any good? A really terrific pop song can survive almost any acoustic mangling and still be delightful. A mediocre one can’t. A mediocre song needs a doubleplusgood sound-system to bring out its half-baked appeal; a truly excellent tune is catchy even when played on a kazoo. For years, I have listened to all of my music either via a) a pair of $25 Harman Kardon speakers attached to my computer, or b) an MP3 player of dubious provenance, outfitted with earbuds that I buy, well, at whatever electronics store I happen to be nearest when the old ones break down, and with whatever spare change I have in my pockets — and I do not think my soul is any the worse for wear.

Granted, maybe I’d think differently if the main thing I was listening to were classical music or opera, instead of pop music. But pop music is supposed to be a disposable, gritty little lo-fi affair.

The audiophile is dead. Long live the audiophile!

(Thanks to Boing Boing for this one!)

That spirograph-like doodle above? It’s a small chunk of this much larger diagram — and, if you believe a radical new theory, it might finally explain the fabric of the universe.

It’s also got a lovely backstory. It begins with one Garrett Lisi, a 39-year-old physicist who is affiliated with no university. Indeed, he’s kind of broke, because he spends most of the year surfing and snowboarding. But anyway, a while ago Lisi got intrigued by the properties of E8, a complex mathematical structure — discovered in 1887 — that encapsulates the symmetries of a 57-dimensional object, and which itself possesses 248 dimensions. Lisi had been thinking about the failures of the “Standard Model” of physics, which has never been able to explain how gravity works. (String theory has been the only rival model that offers an explanation of gravity.) And he was thinking about the vast and baffling array of fundamental particles that make up the universe — and the many particles predicted by string theory, but not yet observed in real-world experiments.

Then Lisi had a breakthrough. He realized that the shape of E8 could be used as a sort of map, to explain the particles and their relationships. As a story in the Telegraph reports:

“My brain exploded with the implications and the beauty of the thing,” he tells New Scientist. “I thought: ‘Holy crap, that’s it!’”

What Lisi had realised was that he could find a way to place the various elementary particles and forces on E8’s 248 points. What remained was 20 gaps which he filled with notional particles, for example those that some physicists predict to be associated with gravity.

Physicists have long puzzled over why elementary particles appear to belong to families, but this arises naturally from the geometry of E8, he says. So far, all the interactions predicted by the complex geometrical relationships inside E8 match with observations in the real world. “How cool is that?” he says.

Lisi wrote up his idea in a paper — “An Exceptionally Simple Theory of Everything” — that he published online last November. Interestingly, since his schema predicts the existence of particles we haven’t yet seen, it will, as he points out, “either succeed or fail spectacularly”. This is nice, since of course one of the big problems afflicting fringe, mail-order doctorate physicists who offer Unified Theories of Everything is that they rarely posit how their concepts of harmonic convergence can ever be proven or disproven. (And believe me, after years of science journalism, I know this the hard way, because I’ve been snailmailed copies of self-published theories that are riddled with simply Rosicrucian weirdness.) It’s even more impressive given that the mythopoeic dimensions of Lisi’s life — a new theory of everything! developed by a surfer! based on a design that wouldn’t be out of place on your pothead cousin’s van! — would seem to fall straight into tinfoil-hat territory.

Nonetheless, despite the promise of testability, Lisi’s ideas have provoked a minor food-fight in the lunchroom of theoretical physics. The Wikipedia page for “An Exceptionally Simple Theory of Everything” has a pretty good summary of it. It’s been called everything from “beautiful stuff” to “childish misunderstandings.” Alas, grasshopper, I lack the math fu and physics fu to even begin to figure out whether Lisi is on to anything. But now I want a poster of E8 for my wall! Dude.

(Thanks to Julian Harley for this one!)

Tomorrow, the New York Times Magazine is publishing an article I wrote about touchscreen voting machines — and why they’re increasingly scaring the heck out of elections officials across the country. Essentially, the machines have garnered so many reports of weird and mysterious behavior that many now worry we’re in danger of repeating the 2000 presidential electoral fiasco: A razor-thin presidential race that goes into recount, provoking arguments over the performance of the voting technology so heated that they wind up in the Supreme Court.

The piece is already online at the Times Magazine’s web site, and it’s accompanied by really spectacular photography of the machines by Alejandra Laviada (a tiny sample is above), so check it out there! I’ve also put a copy below for permanent archive.

Can You Count On These Machines?

After the 2000 election, counties around the country rushed to buy new computerized voting machines. But it turns out that these machines may cause problems worse than hanging chads. Is America ready for another contested election?

by Clive Thompson

Jane Platten gestured, bleary-eyed, into the secure room filled with voting machines. It was 3 a.m. on Nov. 7, and she had been working for 22 hours straight. “I guess we’ve seen how technology can affect an election,” she said. The electronic voting machines in Cleveland were causing trouble again.

For a while, it had looked as if things would go smoothly for the Board of Elections office in Cuyahoga County, Ohio. About 200,000 voters had trooped out on the first Tuesday in November for the lightly attended local elections, tapping their choices onto the county’s 5,729 touch-screen voting machines. The elections staff had collected electronic copies of the votes on memory cards and taken them to the main office, where dozens of workers inside a secure, glass-encased room fed them into the “GEMS server,” a gleaming silver Dell desktop computer that tallies the votes.

Then at 10 p.m., the server suddenly froze up and stopped counting votes. Cuyahoga County technicians clustered around the computer, debating what to do. A young, business-suited employee from Diebold — the company that makes the voting machines used in Cuyahoga — peered into the screen and pecked at the keyboard. No one could figure out what was wrong. So, like anyone faced with a misbehaving computer, they simply turned it off and on again. Voilà: It started working — until an hour later, when it crashed a second time. Again, they rebooted. By the wee hours, the server mystery still hadn’t been solved.

Every once in a while, you run across celebrity profiles that attempt to demonstrate that not all celebrities are as dumb as fenceposts. You’ll read about the fact that, for example, Brad Pitt is deeply engaged by architecture, or that David Duchovny almost finished his literature PhD, or that Christy Turlington studied Eastern philosophy at NYU.

But I think I’ve just stumbled upon the single most impressive bit of celebuscholarship yet: “Frontal Lobe Activation during Object Permanence: Data from Near-Infrared Spectroscopy”. In this paper — published in a 2001 issue of the journal NeuroImage — a group of scientists conducted a pioneering bit of brain-scanning. They took a bunch of infants and used near-infrared spectroscopy (NIRS) to try and probe their mental activity during “object permanence” tests.

Object permanence is, of course, our ability to know that an object still exists even after it’s been hidden from sight. Theorists have argued for years about precisely when an infant develops this ability. The advent of brain-scanning techniques in the 90s offered tantalizing glimpses into mental activity; but it was always hard to scan the brains of infants because most brain-scanning takes place in MRI tubes — and it’s impossible to get an infant to hold its head still inside a tube while subjecting it to funky little mental tasks. (Actually, it’s pretty much impossible to get an infant into a tube without it totally freaking out, let alone holding its head still.)

So the group of scientists in NeuroImage decided to try NIRS instead. NIRS is a very cool new technology: Basically, you put a bunch of near-infrared lights up against your head and shine them directly down into the skull. The light penetrates a few millimeters, much the way that if you hold a flashlight flat against your hand you can see the light penetrating your skin. Since your frontal cortex is quite close to the surface of the skull, the near-infrared light actually hits it and bounces off. It’s possible to scan the reflected light and infer how much blood activity — and thus mental activity — is taking place inside the frontal cortex, on a millisecond-by-millisecond basis.

But here’s the thing: Since all you’re doing is strapping a bunch of little lights to somebody’s skull, it would be — the NeuroImage team theorized — possible to finally peer into the brains of small infants. And sure enough, it worked! They produced the first functional images of infant brains, cracking open a glimpse at the emergence of “object permanence” in a baby’s brain. As they concluded in their paper:

NIRS is a harmless, noninvasive technique that uses no ionizing radiation or contrast agents, does not require the subject to be lying quietly in a scanner, and makes no noise. Therefore, NIRS is particularly well suited to repeated use in neuroimaging studies of infants and children.

Who was on this crack team of scientists? It was led by Jerome Kagan — a Harvard professor who is a pioneer in infant developmental psychology — but it also included Thomas Gaudette, Kathryn A. Walz, David A. Boas … and the Harvard grad student Natalie Hershlag.

Natalie Hershlag, of course, is better known as Natalie Portman.

I have to say, that’s pretty awesome. An Academy-Award-nominated actress who is also a brain scientist. You can download a copy of the paper here if you want.

A while ago, I blogged about “solastalgia,” which is a fascinating new concept in mental health: It is “the sadness caused by environmental change.” I finally got a chance to interview Glenn Albrecht, the philosopher who came up with the idea, and I wound up writing my latest column for Wired magazine about it. The column is now up on the Wired site, and a copy is permanently archived below!

The next victim of climate change? Our minds

by Clive Thompson

Australia is suffering through its worst dry spell in a millennium. The outback has turned into a dust bowl, crops are dying off at fantastic rates, cities are rationing water, coral reefs are dying, and the agricultural base is evaporating.

But what really intrigues Glenn Albrecht — a philosopher by training — is how his fellow Australians are reacting.

They’re getting sad.

As I wrote two years ago when my son Gabriel was born, I generally avoid posting about my personal life — with the exception of childbirth. So I can now once again violate my own self-imposed limits, because on Dec. 15, at 3:00 pm, my wife Emily gave birth to our second son: Zev Toby Thompson! That’s him above. On the left. He’s happy, healthy, and makes some really weird noises.

Zev’s impending arrival is the reason why this blog has experienced a simply lunar form of desolation in the last two months. I was frantically working 24/7 to try and get two major features and a half-dozen columns written before I went on a six-week paternity leave, which began this week. Now that I’m on leave, I can actually blog again, and my goodness do I have a backlog!

I'm Clive Thompson, the author of Smarter Than You Think: How Technology is Changing Our Minds for the Better (Penguin Press). You can order the book now at Amazon, Barnes and Noble, Powells, Indiebound, or through your local bookstore! I'm also a contributing writer for the New York Times Magazine and a columnist for Wired magazine. Email is here or ping me via the antiquated form of AOL IM (pomeranian99).
